Understanding Machine Failures in Car Manufacturing Plants

Enable collaboration between data scientists and operators in automobile manufacturing

Understanding failure modes of major manufacturing equipment

Partnering with one of the largest European car manufacturers, we fed months of sensor data to Optimal Classification Trees to understand the mechanisms underlying failures of robotic welding guns.

Our tool was deployed at several plants in Germany and averted more than 300 hours of unplanned downtime per plant.

Validated by experienced engineers

In a matter of seconds, the output tree recovered failure modes that operators and engineers had needed years of experience to observe and identify. Engineers on the floor carefully validated the tree node by node and described it as "making a lot of sense and showing some ideas I had but could not convince others of!"

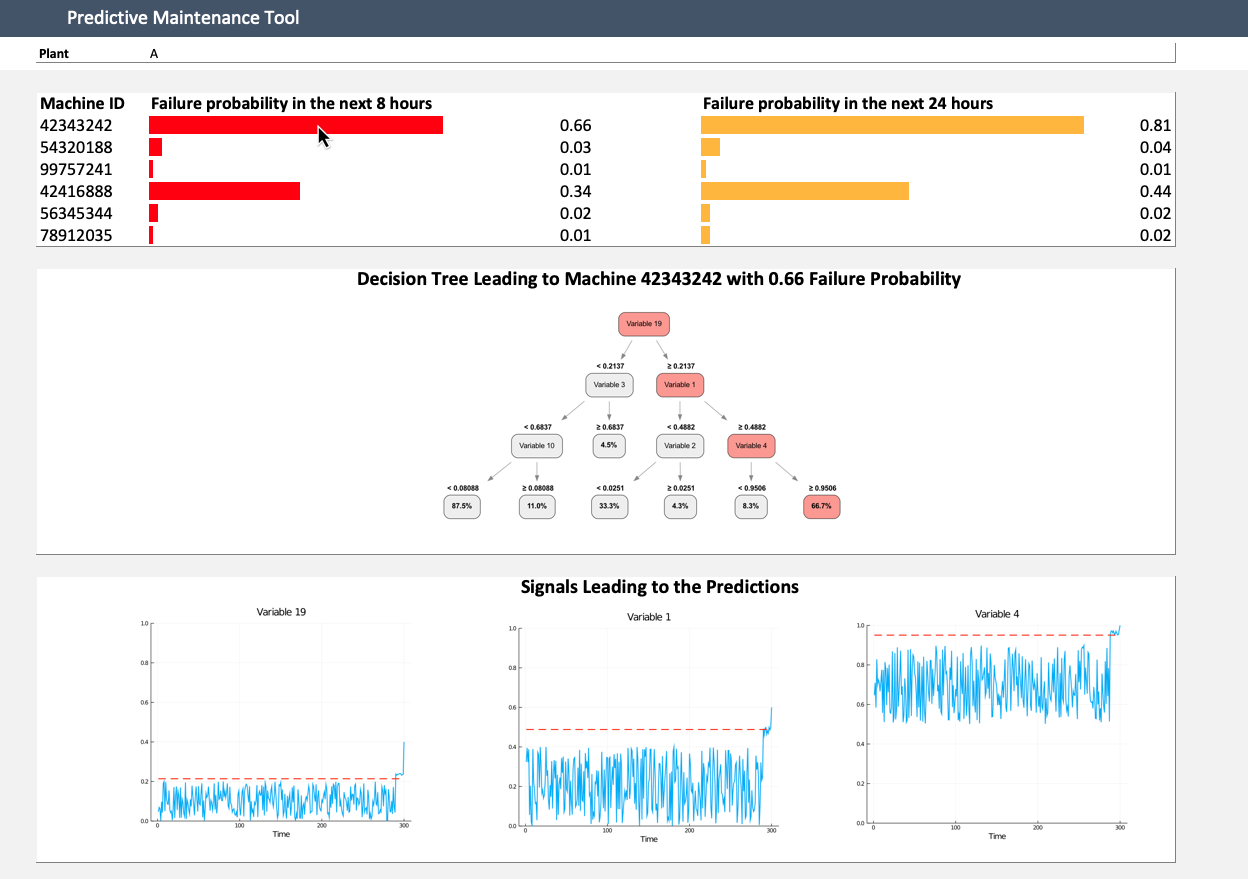

Example decision tree predicting machine failures. The tree automatically highlights two failure modes in red for further testing.

Real-time alert system that notifies operators with evidence

The output of Optimal Classification Trees can feed a dashboard that shows when and why machines are likely to fail. Machine operators are notified whenever a high failure probability is detected.

By displaying the interpretable reasoning behind each prediction, our user-facing dashboard lets operators validate the prediction and act on it confidently, or override it using their domain expertise.
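To make the "alert with evidence" idea concrete, here is a minimal, hypothetical sketch in Python. It does not use Interpretable AI's software or API: it hand-codes a tiny binary classification tree, and the feature names (`pressure_rise_bar_per_s`, `weld_current_ka`), split thresholds, leaf probabilities, and the 0.5 alert threshold are all invented for illustration. The point it shows is that a tree prediction comes with the exact sequence of splits that produced it, which is the evidence an operator would see next to an alert.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A node of a binary classification tree (hypothetical structure)."""
    feature: Optional[str] = None      # feature tested at a split node; None at a leaf
    threshold: float = 0.0             # go left if value < threshold, else right
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    failure_prob: float = 0.0          # only meaningful at a leaf

    def is_leaf(self) -> bool:
        return self.feature is None


def predict_with_path(root: Node, reading: dict[str, float]) -> tuple[float, list[str]]:
    """Return the leaf's failure probability and the splits used to reach it."""
    node, path = root, []
    while not node.is_leaf():
        value = reading[node.feature]
        if value < node.threshold:
            path.append(f"{node.feature} = {value} < {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} >= {node.threshold}")
            node = node.right
    return node.failure_prob, path


def check_alert(root: Node, reading: dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Return an alert message listing its evidence if risk is high, else None."""
    prob, path = predict_with_path(root, reading)
    if prob < threshold:
        return None
    evidence = "\n  ".join(path)
    return f"ALERT: failure probability {prob:.0%}\n  {evidence}"


# Illustrative tree: every feature, threshold and probability here is invented.
tree = Node(
    feature="pressure_rise_bar_per_s", threshold=2.0,
    left=Node(failure_prob=0.05),
    right=Node(
        feature="weld_current_ka", threshold=12.0,
        left=Node(failure_prob=0.30),
        right=Node(failure_prob=0.85),
    ),
)

print(check_alert(tree, {"pressure_rise_bar_per_s": 3.1, "weld_current_ka": 13.5}))
print(check_alert(tree, {"pressure_rise_bar_per_s": 0.8, "weld_current_ka": 13.5}))
```

The first reading passes both splits, reaches the 0.85 leaf and produces an alert that lists the two conditions it satisfied; the second stops at the low-risk leaf, so no alert is raised. Where to set the alert threshold is a trade-off between false alarms and missed failures that would have to be tuned with the operators.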

Improving manufacturing processes

For instance, we identified that a drastic pressure build-up indicates impending failure for certain classes of equipment. Engineers confirmed that this signal was physically meaningful and planned to improve pressure control to reduce the machine failure rate.

Unique Advantage

Why is the Interpretable AI solution unique?

Detecting interpretable paths to failure

Optimal Trees automatically display paths to failure that combine conditions on several machine metrics simultaneously.

Empowering operators

If the model makes a mistake, operators can see the logic behind the prediction and override it instead of trusting it blindly.

Building trust

Engineers need to understand the model's logic before they are comfortable making changes to the process.

Adaptable to low data availability

Even when easily accessible data is scarce and covers only a short time frame, Interpretable AI's software modules can still surface useful findings.